Everything You Need To Know About Sensor Fusion For Autonomous Driving

Hello guys, welcome back to our blog. In this article, we will discuss sensor fusion for autonomous driving, the types of sensors used in autonomous vehicles, and the algorithms and techniques used in sensor fusion.

Sensor Fusion For Autonomous Driving

The evolution of autonomous vehicles (AVs) is revolutionizing the transportation landscape. Central to their safe and efficient operation is sensor fusion, a technique that integrates data from multiple sensors to perceive the environment more accurately than any single sensor could alone. This comprehensive guide explores the principles, technologies, methodologies, and future trends of sensor fusion in autonomous driving.

What is Sensor Fusion?

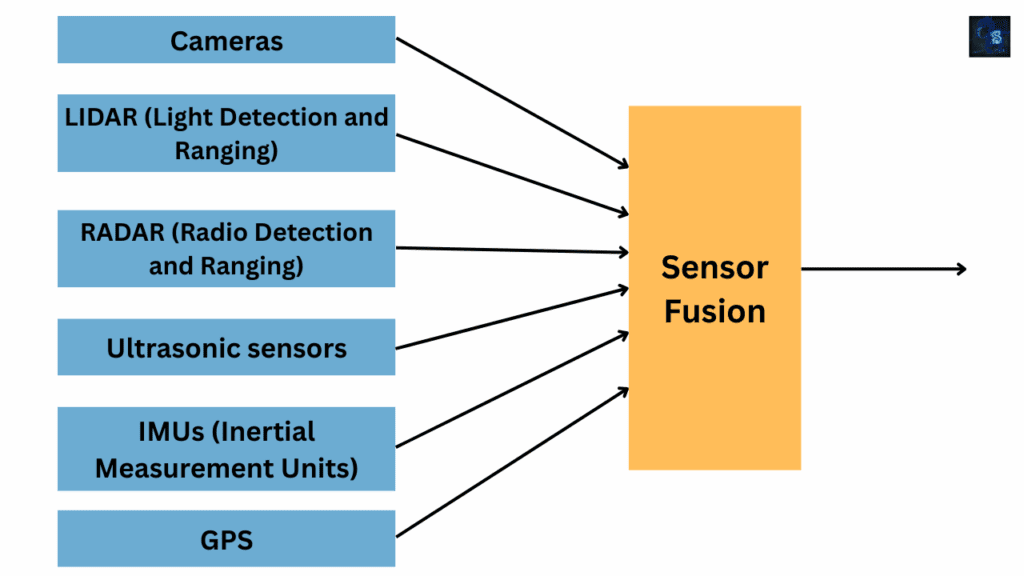

Sensor fusion refers to the process of combining sensory data from disparate sources, such as LiDAR, radar, cameras, GPS, and inertial measurement units (IMUs), to produce more consistent, accurate, and dependable information. In AVs, sensor fusion enables the vehicle to create a detailed 360-degree view of its surroundings, enhancing decision-making and driving capabilities.
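To make the idea of "more accurate than any single sensor" concrete, here is a minimal sketch of one classic way to combine two independent readings of the same quantity: inverse-variance weighting. The function name and the radar and camera numbers are hypothetical and chosen only for illustration; real systems fuse far richer data.

```python
def fuse_measurements(z1, var1, z2, var2):
    """Fuse two independent estimates of the same quantity.

    Each estimate is weighted by the inverse of its variance, so the
    more trustworthy sensor contributes more to the result.
    """
    w1 = 1.0 / var1
    w2 = 1.0 / var2
    fused = (w1 * z1 + w2 * z2) / (w1 + w2)
    fused_var = 1.0 / (w1 + w2)  # never larger than the smaller input variance
    return fused, fused_var


# Hypothetical readings of the distance to the car ahead (metres):
# radar is precise (variance 0.25), camera is noisier (variance 1.0).
distance, variance = fuse_measurements(20.0, 0.25, 21.0, 1.0)
print(distance, variance)  # roughly 20.2 m with variance of about 0.2
```

Note that the fused variance is smaller than either input variance, which is the basic reason fusion helps when the sensor errors are independent.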

Why Sensor Fusion is Crucial

- Redundancy and Reliability: Overlapping sensors cross-check one another, so a fault or false reading in one sensor can be caught by the others.

- Environmental Adaptation: Compensates for the weaknesses of individual sensors under different conditions.

- Real-Time Processing: A continuously updated, fused picture of the scene lets the vehicle react quickly to a changing environment.

- Accurate Localization: Enhances vehicle positioning and trajectory planning.

Types of Sensors Used in Autonomous Vehicles

01. LiDAR (Light Detection and Ranging)

- Function: Measures distance using laser light reflections.

- Pros: High-resolution 3D mapping.

- Cons: Expensive; performance degrades in rain, fog, and snow.

02. Radar (Radio Detection and Ranging)

- Function: Uses radio waves to detect object distance and speed.

- Pros: Robust in adverse weather, long-range detection.

- Cons: Lower spatial resolution.

03. Cameras

- Function: Capture images for object classification and lane detection.

- Pros: Rich semantic information.

- Cons: Limited performance in low light or bad weather.

04. Ultrasonic Sensors

- Function: Detect close-range obstacles.

- Pros: Ideal for parking and low-speed navigation.

- Cons: Limited range and resolution.

05. GPS and IMU

- Function: Provide geolocation and motion data.

- Pros: Essential for localization and navigation.

- Cons: The GPS signal can be blocked in tunnels or urban canyons, and IMU estimates drift over time unless they are corrected.
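The ranging sensors above (LiDAR and radar) both rely on simple physics: distance comes from the round-trip time of the signal, and radar can also get radial speed from the Doppler shift. The sketch below shows those two textbook formulas. The function names and the example numbers are assumptions for illustration and do not come from any particular sensor's API.

```python
SPEED_OF_LIGHT = 299_792_458.0  # metres per second


def range_from_time_of_flight(round_trip_s):
    """Distance to the target: the pulse travels there and back, hence / 2."""
    return SPEED_OF_LIGHT * round_trip_s / 2.0


def radial_speed_from_doppler(doppler_shift_hz, carrier_hz):
    """Radial (closing) speed from the Doppler shift of a radar echo."""
    return doppler_shift_hz * SPEED_OF_LIGHT / (2.0 * carrier_hz)


# Hypothetical example: an echo that returns after 1 microsecond,
# and a 77 GHz automotive radar that sees a 5133 Hz Doppler shift.
target_range = range_from_time_of_flight(1e-6)            # about 150 m
target_speed = radial_speed_from_doppler(5133.0, 77e9)    # about 10 m/s
print(target_range, target_speed)
```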

Levels of Sensor Fusion

Sensor fusion can occur at different stages of the perception process:

01. Low-Level (Raw Data) Fusion: Combines raw data from multiple sensors before any interpretation. Can offer the highest accuracy because no information is discarded, but requires significant computational power and bandwidth.

02. Mid-Level (Feature-Level) Fusion: Extracts features (e.g., edges, corners) from each sensor before combining them. Balances performance and complexity.

03. High-Level (Decision-Level) Fusion: Fuses the final outputs or decisions from each sensor. Easier to implement but typically less precise, because each sensor's raw detail is lost before fusion.
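As a rough illustration of the high-level (decision-level) case, the sketch below fuses the labels that separate sensor pipelines have already produced, using confidence-weighted voting. This is only one simple option among many; the function name, the labels, and the confidence values are hypothetical.

```python
from collections import defaultdict


def fuse_decisions(decisions):
    """Confidence-weighted vote over per-sensor classification results.

    decisions: list of (label, confidence) tuples, one per sensor pipeline.
    Returns the winning label and its share of the total confidence.
    """
    scores = defaultdict(float)
    for label, confidence in decisions:
        scores[label] += confidence
    best = max(scores, key=scores.get)
    return best, scores[best] / sum(scores.values())


# Hypothetical outputs from camera, LiDAR, and radar pipelines.
decisions = [("pedestrian", 0.9), ("pedestrian", 0.6), ("cyclist", 0.5)]
label, share = fuse_decisions(decisions)
print(label, share)  # "pedestrian" wins with about 0.75 of the vote
```

Notice that nothing here looks at pixels or point clouds. That is why this level is easy to implement, and also why it cannot recover detail that the individual pipelines already threw away.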

Sensor Fusion Architectures

01. Centralized Architecture: All raw sensor data is transmitted to a central processor. Allows optimal fusion but can lead to bottlenecks.

02. Distributed Architecture: Sensors process data locally before sending results to a central unit. Reduces bandwidth but may discard information that a central fusion step could have used.

03. Hybrid Architecture: Combines both centralized and distributed approaches. Balances efficiency and accuracy.

Algorithms and Techniques in Sensor Fusion

01. Kalman Filter

- Optimal for linear systems with Gaussian noise.

- Used for state estimation and object tracking.
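A minimal sketch of a one-dimensional Kalman filter is shown below, assuming the tracked quantity (for example, the distance to a parked car) is roughly constant. The process noise `q`, the measurement noise `r`, and the readings are made-up values for illustration only.

```python
def kalman_step(x, p, z, q, r):
    """One predict/update cycle of a scalar Kalman filter.

    x, p : previous state estimate and its variance
    z    : new measurement
    q, r : process and measurement noise variances (assumed known)
    """
    # Predict: the state is modelled as constant, so only uncertainty grows.
    x_pred = x
    p_pred = p + q
    # Update: blend prediction and measurement using the Kalman gain.
    k = p_pred / (p_pred + r)
    x_new = x_pred + k * (z - x_pred)
    p_new = (1.0 - k) * p_pred
    return x_new, p_new


# Start with a poor guess (0 m) and a large uncertainty.
x, p = 0.0, 100.0
for z in [10.2, 9.8, 10.1, 9.9, 10.0]:  # hypothetical noisy readings
    x, p = kalman_step(x, p, z, q=0.01, r=0.5)
print(x, p)  # the estimate settles close to 10 and the variance shrinks
```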

02. Extended Kalman Filter (EKF)

- Handles non-linear systems by linearizing around the current estimate.
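To show what "linearizing around the current estimate" means, here is a small sketch of an EKF measurement update with a scalar state. The setup is an assumption made for illustration: the vehicle's position `x` along a straight road is estimated from the range to a beacon that sits `h_offset` metres to the side of the road at position 0, so the measurement model `sqrt(x^2 + h_offset^2)` is non-linear.

```python
import math


def ekf_update(x, p, z, r, h_offset):
    """EKF measurement update for a range-only measurement (scalar state)."""
    predicted_z = math.hypot(x, h_offset)  # non-linear measurement model h(x)
    jac = x / predicted_z                  # dh/dx at the current estimate
    s = jac * p * jac + r                  # innovation variance
    k = p * jac / s                        # Kalman gain
    x_new = x + k * (z - predicted_z)
    p_new = (1.0 - k * jac) * p
    return x_new, p_new


# Hypothetical scenario: the true position is 30 m, the initial guess is 25 m.
true_x, h_offset = 30.0, 5.0
z = math.hypot(true_x, h_offset)  # noise-free range, to keep the demo simple
x, p = 25.0, 25.0
for _ in range(3):
    x, p = ekf_update(x, p, z, r=0.1, h_offset=h_offset)
print(x, p)  # the estimate moves close to 30 m
```

The only difference from the linear Kalman update is that the measurement matrix is replaced by the derivative `jac`, which is recomputed at every step.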

03. Unscented Kalman Filter (UKF)

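- Propagates a set of sigma points through the nonlinear model instead of linearizing it, which often gives better accuracy than the EKF for highly nonlinear systems.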

04. Particle Filter

- Uses a set of weighted samples (particles) to represent the probability distribution of the state.

- Effective for non-linear, non-Gaussian problems.
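The particle idea can be sketched in a few lines for a one-dimensional localization problem. This is a toy example under stated assumptions: a stationary vehicle, a Gaussian measurement model, plain multinomial resampling, and a small jitter to keep the particles diverse. All names and numbers are hypothetical.

```python
import math
import random


def particle_filter_step(particles, z, meas_std, jitter_std, rng):
    """Weight particles by the measurement, resample, then add small jitter."""
    # Weight: how well does each particle explain the measurement z?
    weights = [math.exp(-0.5 * ((z - p) / meas_std) ** 2) for p in particles]
    # Resample: likely particles are duplicated, unlikely ones die out.
    resampled = rng.choices(particles, weights=weights, k=len(particles))
    # Jitter: avoid collapsing onto a handful of identical values.
    return [p + rng.gauss(0.0, jitter_std) for p in resampled]


rng = random.Random(0)  # fixed seed so the run is repeatable
true_position = 5.0
particles = [rng.uniform(0.0, 10.0) for _ in range(500)]  # no prior knowledge

for _ in range(20):
    z = true_position + rng.gauss(0.0, 0.5)  # simulated noisy measurement
    particles = particle_filter_step(particles, z, 0.5, 0.05, rng)

estimate = sum(particles) / len(particles)
print(estimate)  # should land near the true position of 5.0
```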

05. Deep Learning-Based Fusion

- Uses neural networks to fuse sensor data at the semantic level.

- Requires large datasets and high computation.

06. Bayesian Networks

- Probabilistic graphical models that represent dependencies between variables and handle uncertainty.
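The smallest useful Bayesian network for fusion has one hidden node ("is there an obstacle?") with each sensor's detection depending on it. A sketch of the resulting update is shown below, assuming the sensors are conditionally independent given the true state. The detection rates and false-alarm rates are invented for illustration.

```python
def bayes_update(prior, p_hit_given_obstacle, p_hit_given_clear):
    """Posterior probability of an obstacle after one sensor reports a hit."""
    numerator = p_hit_given_obstacle * prior
    evidence = numerator + p_hit_given_clear * (1.0 - prior)
    return numerator / evidence


belief = 0.1  # prior probability that an obstacle is present

# Camera reports a detection (assumed 90% hit rate, 10% false-alarm rate).
belief_after_camera = bayes_update(belief, 0.9, 0.1)

# Radar also reports a detection (assumed 80% hit rate, 20% false-alarm rate).
belief_after_radar = bayes_update(belief_after_camera, 0.8, 0.2)

print(belief_after_camera, belief_after_radar)  # about 0.5, then about 0.8
```

Each sensor on its own is not conclusive here, but two agreeing sensors raise the belief from 10% to about 80%.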

Applications of Sensor Fusion in AVs

- Object Detection and Tracking: Accurately identifying and monitoring nearby objects.

- Lane Keeping and Road Edge Detection: Integrating camera and LiDAR data for precise navigation.

- Localization and Mapping: Combining GPS, IMU, and LiDAR to determine vehicle position.

- Decision-Making and Path Planning: Using fused data to make safe and efficient driving decisions.

- Collision Avoidance: Real-time obstacle detection and evasion.
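For the localization case above, one lightweight way to combine dead reckoning with GPS is a complementary filter. It is used here as a simple stand-in and not as the method any particular vehicle uses. The sketch assumes a one-dimensional position, a velocity already derived from the IMU or wheel speed, and a blend factor `alpha`; all of these are illustrative assumptions.

```python
def fuse_position(prev_pos, velocity, dt, gps_pos=None, alpha=0.8):
    """Blend a dead-reckoning prediction with a GPS fix when one is available."""
    predicted = prev_pos + velocity * dt  # dead reckoning from motion data
    if gps_pos is None:                   # no fix, e.g. inside a tunnel
        return predicted
    return alpha * predicted + (1.0 - alpha) * gps_pos


pos = 0.0
# Hypothetical GPS fixes in metres, one per second; None marks a GPS outage.
gps_fixes = [1.1, 1.9, None, None, 5.2]
for gps in gps_fixes:
    pos = fuse_position(pos, velocity=1.0, dt=1.0, gps_pos=gps)
print(pos)  # close to 5 m, even though two fixes were missing
```

During the outage the estimate keeps moving on motion data alone, and the next GPS fix pulls it back so that dead-reckoning drift does not accumulate forever.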

Challenges in Sensor Fusion

- Data Synchronization: Ensuring time-aligned data from different sensors.

- Sensor Calibration: Aligning coordinate systems and maintaining accuracy.

- Environmental Factors: Rain, fog, and lighting affect sensor performance.

- Computational Load: Real-time processing demands high-performance hardware.

- Cost and Integration: Balancing performance with affordability.
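The data synchronization challenge above can be illustrated with a small sketch: sensors sample at different times, so one stream is interpolated to the timestamp of the other before fusing. Linear interpolation is just one simple choice, and the function name, timestamps, and readings are hypothetical.

```python
from bisect import bisect_left


def interpolate_at(timestamps, values, t):
    """Linearly interpolate a sensor stream (sorted timestamps) at time t."""
    if not timestamps[0] <= t <= timestamps[-1]:
        raise ValueError("t is outside the recorded time range")
    i = bisect_left(timestamps, t)
    if timestamps[i] == t:  # exact sample available, no interpolation needed
        return values[i]
    t0, t1 = timestamps[i - 1], timestamps[i]
    frac = (t - t0) / (t1 - t0)
    return values[i - 1] + frac * (values[i] - values[i - 1])


# Hypothetical radar ranges sampled every 50 ms, and a camera frame at 75 ms.
radar_t = [0.00, 0.05, 0.10]
radar_range = [20.0, 19.5, 19.0]
camera_t = 0.075
aligned_range = interpolate_at(radar_t, radar_range, camera_t)
print(aligned_range)  # about 19.25 m, the radar range at the camera's timestamp
```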

Case Studies and Real-World Implementations

01. Tesla: Primarily relies on cameras and neural nets. Uses radar selectively.

02. Waymo: Utilizes a combination of LiDAR, radar, and cameras for perception and high-resolution mapping.

03. Mobileye: Camera-first approach with advanced AI for perception and decision-making.

Future Trends in Sensor Fusion

- Edge AI and Real-Time Processing: Improved processors for onboard fusion.

- V2X Communication: Fusion with external data sources like infrastructure.

- Quantum Computing: Potential future application for real-time fusion.

- Enhanced AI Models: More robust deep learning models for perception.

- Sensor Miniaturization: Compact and more efficient sensors.

Conclusion

Sensor fusion is the cornerstone of autonomous driving technology. It bridges the gap between perception and decision-making by synthesizing diverse data into actionable insights. As AVs become more prevalent, advancements in sensor fusion algorithms, hardware, and integration strategies will play a pivotal role in shaping the future of mobility.

This guide serves as a foundation for understanding the intricate yet fascinating world of sensor fusion in autonomous vehicles. Whether you’re a student, engineer, or enthusiast, mastering this topic opens the door to the next generation of intelligent transportation systems.

This was about “Everything You Need To Know About Sensor Fusion For Autonomous Driving”. Thank you for reading.
