What Is Processing Workloads, Batch, Transactional Processing

Hello guys, welcome back to our blog. In this article, we will discuss what processing workloads are, what batch processing is, and what transactional processing is, and we will also look at how both modes work.

If you have any doubts about electrical, electronics, or computer science, feel free to ask. You can also catch me on Instagram: CS Electrical & Electronics.


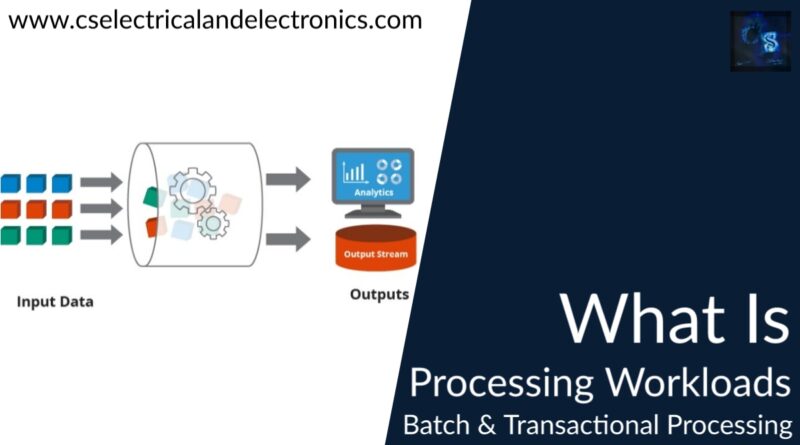

What Is a Processing Workload

In Big Data and analytics, a processing workload is defined as the amount and nature of the data that is processed within a certain amount of time. Processing workloads are divided into two types:

- Batch

- Transactional
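To make the two categories concrete, here is a minimal Python sketch that describes a workload by the traits this article uses to tell them apart (how much data one request touches, whether access is sequential or random, and how long a response may take) and labels it batch or transactional. The `WorkloadProfile` class, the `classify` function, and both threshold values are hypothetical illustrations chosen for this example; real systems do not use fixed cut-offs like these.

```python
from dataclasses import dataclass

# Hypothetical cut-offs, for illustration only.
LARGE_REQUEST_ROWS = 100_000   # rows touched by a single query or job
LOW_LATENCY_SECONDS = 1.0      # responses faster than this count as "interactive"


@dataclass
class WorkloadProfile:
    rows_per_request: int       # amount of data one query or job reads/writes
    access_pattern: str         # "sequential" or "random"
    max_latency_seconds: float  # longest acceptable response time


def classify(profile: WorkloadProfile) -> str:
    """Return "batch" or "transactional" by majority vote over three traits."""
    batch_votes = sum([
        profile.rows_per_request >= LARGE_REQUEST_ROWS,     # huge data volume
        profile.access_pattern == "sequential",             # sequential reads/writes
        profile.max_latency_seconds > LOW_LATENCY_SECONDS,  # high latency is acceptable
    ])
    return "batch" if batch_votes >= 2 else "transactional"


nightly_etl = WorkloadProfile(50_000_000, "sequential", 3 * 3600)
order_update = WorkloadProfile(1, "random", 0.2)
print(classify(nightly_etl))   # batch
print(classify(order_update))  # transactional
```

A majority vote is used so that one unusual trait (for example, a small but sequential job) does not flip the label on its own.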

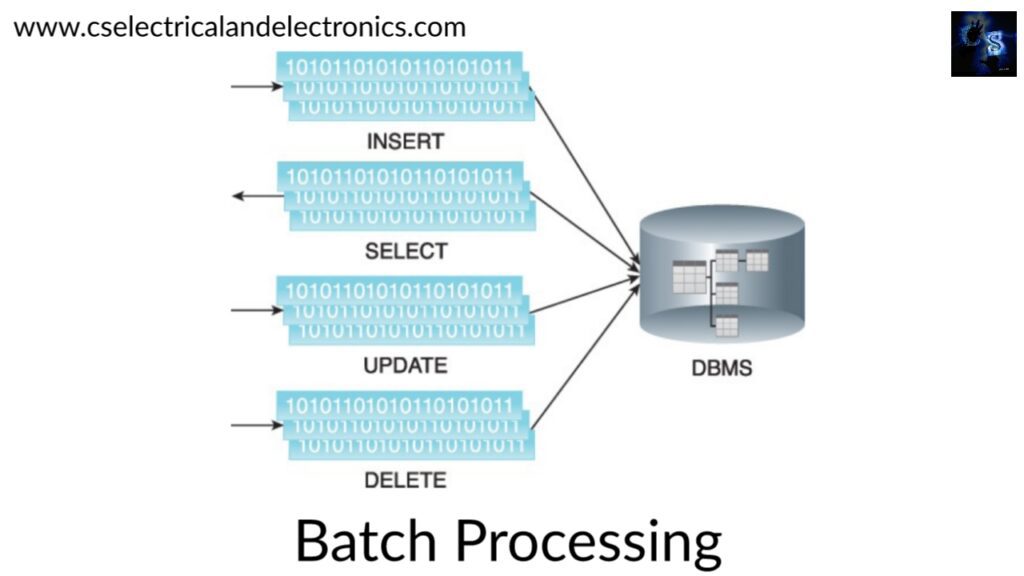

Batch Processing

Batch processing is also called offline processing. Processing data in batches normally imposes delays, which in turn result in high-latency responses. Batch workloads involve large quantities of data with sequential reads/writes and comprise groups of read or write queries.

Queries can be very complex and involve multiple joins. OLAP (online analytical processing) systems normally process workloads in batches. These tasks are highly read-intensive and involve large volumes of data.

A batch workload can contain grouped reads/writes, such as a group of INSERT statements.

In batch mode, data is processed offline in batches, and the response time varies from minutes to hours. Data must be persisted to disk before it is processed. Batch mode generally involves processing a range of large datasets, which addresses the volume and variety characteristics of Big Data datasets.

Batch mode is relatively simple, easy to set up, and inexpensive compared to real-time mode. Strategic BI, predictive and prescriptive analytics, and ETL operations are batch-oriented.
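The batch traits above (grouped writes, data stored before processing, read-intensive queries with joins) can be sketched with Python's built-in `sqlite3` module. This is a minimal illustration, not a production ETL job: the `products`/`sales` schema and the `create_schema` and `run_batch` helpers are hypothetical, and an in-memory database stands in for the on-disk store a real batch system would write to.

```python
import sqlite3


def create_schema(conn: sqlite3.Connection) -> None:
    """Hypothetical schema: a small lookup table and a large fact table."""
    conn.execute("CREATE TABLE products (id INTEGER PRIMARY KEY, category TEXT)")
    conn.execute("CREATE TABLE sales (product_id INTEGER, amount REAL)")
    with conn:
        conn.executemany("INSERT INTO products VALUES (?, ?)",
                         [(1, "books"), (2, "games")])


def run_batch(conn: sqlite3.Connection, sales_rows: list) -> list:
    # Grouped writes: the whole batch of INSERTs is committed as one unit,
    # so the data is stored before any analysis runs.
    with conn:
        conn.executemany("INSERT INTO sales VALUES (?, ?)", sales_rows)
    # Read-intensive analytical query: scans every sale and joins to products.
    return conn.execute(
        "SELECT p.category, SUM(s.amount) FROM sales AS s "
        "JOIN products AS p ON p.id = s.product_id "
        "GROUP BY p.category ORDER BY p.category"
    ).fetchall()


conn = sqlite3.connect(":memory:")  # a real batch job would use a file on disk
create_schema(conn)
day_of_sales = [(1, 12.5), (2, 30.0), (1, 7.5), (2, 10.0)]
print(run_batch(conn, day_of_sales))  # [('books', 20.0), ('games', 40.0)]
```

In a real pipeline the batch would be far larger and the job would typically run on a schedule (for example, nightly), which is where the minutes-to-hours response time comes from.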

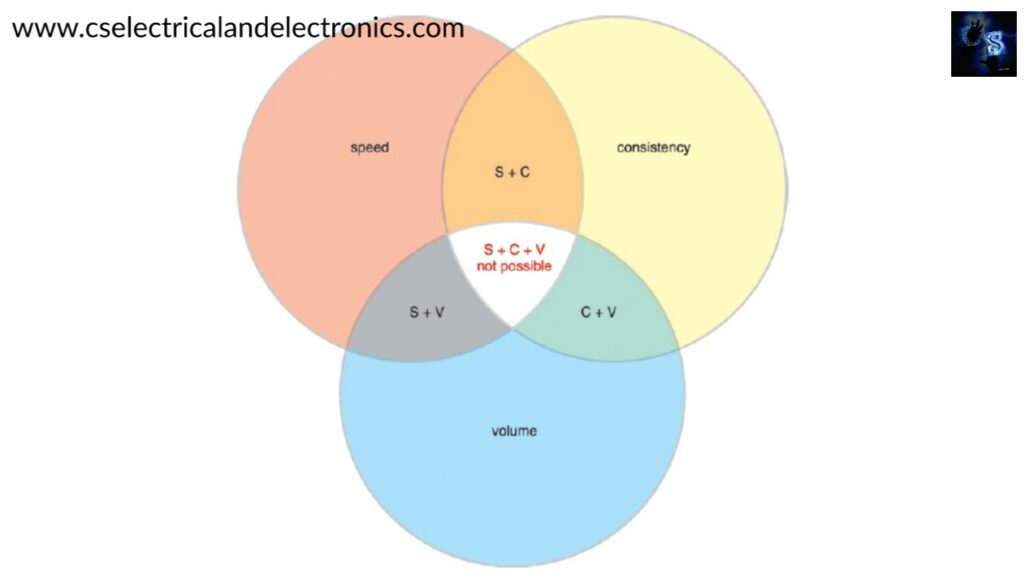

The Speed, Consistency, and Volume Principle of Distributed Data Processing

Speed: Speed refers to how fast data can be processed once it is produced. In real-time analytics, data is processed faster than in batch analytics. This measure normally excludes the time taken to capture the data and focuses only on the actual processing, such as generating statistics or executing an algorithm.
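As a small illustration of measuring speed the way it is defined here, the sketch below captures the data first and starts the timer only around the processing step. The `capture_readings` and `timed_processing` functions and the random readings are hypothetical stand-ins for a real data source and analysis.

```python
import random
import statistics
import time


def capture_readings(n, seed=0):
    """Stand-in for data capture: hypothetical sensor readings."""
    rng = random.Random(seed)
    return [rng.uniform(0.0, 100.0) for _ in range(n)]


def timed_processing(readings):
    """Time only the processing step (producing statistics), not the capture."""
    start = time.perf_counter()
    summary = {"mean": statistics.fmean(readings), "max": max(readings)}
    elapsed = time.perf_counter() - start
    return summary, elapsed


readings = capture_readings(100_000)  # capture happens before the clock starts
summary, seconds = timed_processing(readings)
print(f"processed {len(readings)} readings in {seconds:.4f} s")
```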

Consistency: Consistency refers to the accuracy and precision of the results. Results are considered accurate if they are close to the correct value, and precise if they are close to each other. A more consistent system uses all of the available data, which yields higher accuracy and precision than a less consistent system that relies on sampling techniques; sampling can lower accuracy while keeping precision at an acceptable level.
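The trade-off between using all of the data and sampling can be illustrated in a few lines of Python. This sketch assumes a synthetic dataset of uniformly distributed values; `full_mean` and `sampled_mean` are hypothetical helper names, and the sample size of 1,000 is an arbitrary choice.

```python
import random
import statistics


def full_mean(data):
    """More consistent: uses every available record."""
    return statistics.fmean(data)


def sampled_mean(data, sample_size, seed):
    """Less consistent: estimates the mean from a random sample."""
    rng = random.Random(seed)
    return statistics.fmean(rng.sample(data, sample_size))


# Synthetic dataset (assumption): 200,000 values spread uniformly over 0-100.
gen = random.Random(42)
data = [gen.uniform(0.0, 100.0) for _ in range(200_000)]

exact = full_mean(data)
estimates = [sampled_mean(data, 1_000, seed) for seed in range(5)]
print(f"all data: {exact:.2f}")
print("samples: ", [round(e, 2) for e in estimates])
```

The sampled estimates typically land near the full-data mean without matching it exactly, while reading only 0.5% of the records each time.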

Volume: Volume refers to the amount of data that can be processed. Big Data’s velocity characteristic produces fast-growing datasets, which lead to large volumes of data that must be processed in a distributed manner. Processing such large volumes of data in their entirety while also guaranteeing both speed and consistency is not possible.
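Distributed processing of large volumes usually means splitting the data into partitions, processing each partition independently, and combining the partial results. The following is a single-machine sketch of that idea, in which a thread pool stands in for the separate nodes of a cluster; `partition`, `partial_sum_and_count`, and `distributed_mean` are hypothetical names used only for this example.

```python
from concurrent.futures import ThreadPoolExecutor


def partition(data, n_parts):
    """Split the dataset into at most n_parts roughly equal chunks."""
    if not data:
        raise ValueError("no data to partition")
    size = -(-len(data) // n_parts)  # ceiling division
    return [data[i:i + size] for i in range(0, len(data), size)]


def partial_sum_and_count(chunk):
    """Work each node would do on its own partition (the "map" step)."""
    return sum(chunk), len(chunk)


def distributed_mean(data, n_workers=4):
    parts = partition(data, n_workers)
    # Threads stand in for cluster nodes in this single-machine sketch.
    with ThreadPoolExecutor(max_workers=n_workers) as pool:
        partials = list(pool.map(partial_sum_and_count, parts))
    # Combine the partial results (the "reduce" step).
    total = sum(s for s, _ in partials)
    count = sum(c for _, c in partials)
    return total / count


print(distributed_mean(list(range(1, 101))))  # 50.5
```

Because of CPython's global interpreter lock, the threads here show the structure of partitioned processing rather than a real speed-up; a distributed framework would run each partition on a separate machine.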

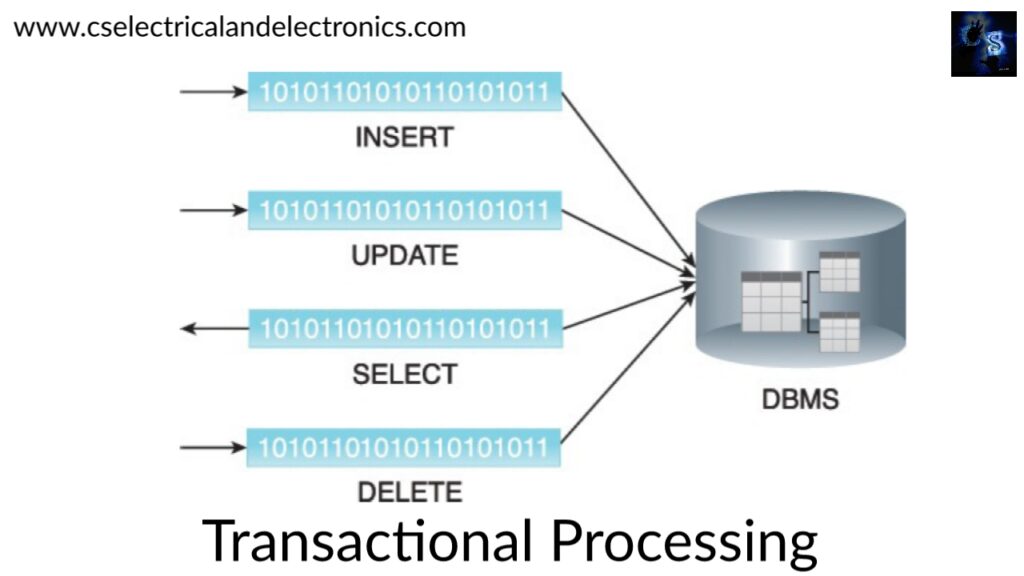

Transactional Processing

Transactional processing is also called online processing. Data is processed interactively without delay, resulting in low-latency responses. Transactional workloads involve small amounts of data with random reads and writes. OLTP (online transaction processing) and operational systems, which are normally write-intensive, fall into this category.

Although transactional workloads contain a mix of read and write queries, they are generally more write-intensive than read-intensive. They involve fewer joins than business intelligence and reporting workloads.

Transactional workloads have few joins and lower-latency responses.

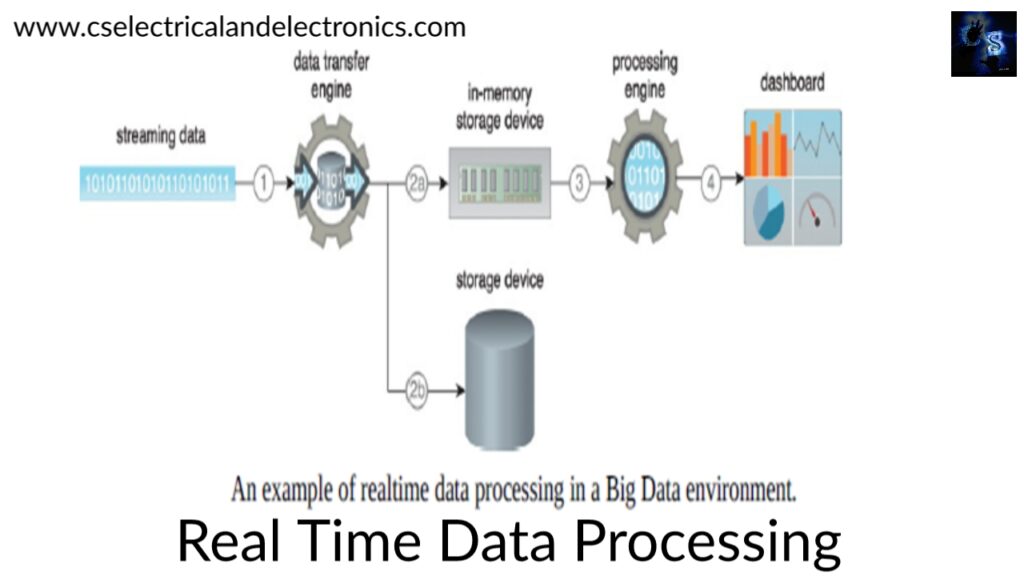

Processing in Real-Time Mode

In real-time mode, data is processed in memory as it is captured, before being persisted to disk. Response time normally ranges from under a second to under a minute. Real-time mode addresses the velocity characteristic of Big Data datasets.

Real-time processing is also known as event or stream processing, because the data arrives either continuously (stream) or at intervals (event). An individual event or stream datum is normally small, but the continuous arrival of data results in very large datasets.
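The in-memory-first behaviour of real-time (stream) processing can be sketched with a Python generator: each event updates a running average as soon as it arrives, and raw events are written to storage only afterwards, in small groups. The `process_stream` function, the `(sensor, value)` event format, and the `flush_every` group size are hypothetical; the in-memory SQLite database stands in for disk storage, and a production system would typically use a dedicated stream-processing engine instead.

```python
import sqlite3


def _flush(conn, buffer):
    """Persist buffered events in one small group, then empty the buffer."""
    if buffer:
        with conn:
            conn.executemany("INSERT INTO events VALUES (?, ?)", buffer)
        buffer.clear()


def process_stream(events, conn, flush_every=3):
    """Yield a running average per event; persist raw events afterwards."""
    conn.execute("CREATE TABLE IF NOT EXISTS events (sensor TEXT, value REAL)")
    buffer = []
    total, count = 0.0, 0
    try:
        for sensor, value in events:
            # Process in memory as soon as the event is captured.
            total += value
            count += 1
            buffer.append((sensor, value))
            yield total / count  # result is available before the event is stored
            if len(buffer) >= flush_every:
                _flush(conn, buffer)
    finally:
        _flush(conn, buffer)  # store leftovers, even if the consumer stops early


conn = sqlite3.connect(":memory:")  # stands in for on-disk storage
events = [("s1", 10.0), ("s2", 20.0), ("s1", 30.0), ("s2", 40.0)]
print(list(process_stream(events, conn)))  # [10.0, 15.0, 20.0, 25.0]
print(conn.execute("SELECT COUNT(*) FROM events").fetchone()[0])  # 4
```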

Interactive mode falls within the real-time category; it normally refers to query processing in real time. Operational BI and analytics are generally conducted in real-time mode.

This was about “What Is Processing Workloads”. I hope this article on processing workloads helps you all a lot. Thank you for reading.


Author Profile

- Chetu

- Interests: Engineering | Entrepreneurship | Politics | History | Travelling | Content Writing | Technology | Cooking
